- #AWS EMR VS S3 COPY LOG FILES TO REDSHIFT FULL#

- #AWS EMR VS S3 COPY LOG FILES TO REDSHIFT TV#

- #AWS EMR VS S3 COPY LOG FILES TO REDSHIFT DOWNLOAD#

| STAGE_CREDENTIALS | AWS_ROLE | String | arn:aws:iam::001234567890:role/mysnowflakerole | |
| STAGE_CREDENTIALS | AWS_EXTERNAL_ID | String | MYACCOUNT_SFCRole=2_jYfRf+gT0xSH7G2q0RAODp00Cqw= | |
| STAGE_CREDENTIALS | SNOWFLAKE_IAM_USER | String | arn:aws:iam::123456789001:user/vj4g-a-abcd1234 | |

Record the values for the SNOWFLAKE_IAM_USER and AWS_EXTERNAL_ID properties, where:

- SNOWFLAKE_IAM_USER: An AWS IAM user created for your Snowflake account. This user is the same for every external S3 stage created in your account.
- AWS_EXTERNAL_ID: A unique ID assigned to the specific stage, in the format snowflakeAccount_SFCRole=snowflakeRoleId_randomId.
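The two recorded values are typically used in the trust relationship of the AWS role listed under AWS_ROLE. The following is a minimal sketch, assuming the standard IAM trust policy shape with an `sts:ExternalId` condition; the function name `build_trust_policy` is hypothetical, and the ARN and external ID are the sample values from the output above.

```python
import json


def build_trust_policy(snowflake_iam_user: str, aws_external_id: str) -> dict:
    """Build an IAM trust policy document (as a dict) that allows the
    Snowflake IAM user to assume the role, but only when it presents
    the stage's external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": snowflake_iam_user},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "StringEquals": {"sts:ExternalId": aws_external_id}
                },
            }
        ],
    }


# Sample values copied from the stage description above.
policy = build_trust_policy(
    "arn:aws:iam::123456789001:user/vj4g-a-abcd1234",
    "MYACCOUNT_SFCRole=2_jYfRf+gT0xSH7G2q0RAODp00Cqw=",
)
print(json.dumps(policy, indent=2))
```

The printed JSON is what you would paste into the role's trust relationship; check it against your own account's values before applying it.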

In some instances, you may want to keep some data on S3 and query it through Spectrum. In others, you will be better off storing your data in Amazon Redshift, in dimensional star schemas.

The storage benefits of S3 come with a performance trade-off, because the data has to be read into Amazon Redshift before it can be transformed. Using Matillion ETL for Amazon Redshift, we can build and trigger a job to read the data and combine it with data stored on Amazon Redshift.

⇨ Tip: Aggregate and Filter in Spectrum. Spectrum is fantastic at filtering and aggregating very large datasets, and the best performance comes from taking the load off Amazon Redshift. This means you should filter and aggregate in Spectrum before you start joining data, which can be handled in Amazon Redshift. This will create a new table with the aggregated/joined data.

Finally, we can take our new table and write it back to S3 if required, using the Rewrite Table or Rewrite External Table components.

Partitioning your data allows you to place sensible breakpoints, based on the data, that split it into logical chunks. This means a partition, as opposed to the full dataset, can be tackled by multiple nodes, improving processing times and reducing cost.
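The order of operations described here (prune to the relevant partition, filter and aggregate the large dataset, and only then join) can be illustrated with a small in-memory sketch. This is not Spectrum or Matillion code. The key layout (`dt=` folders), the sample rows, and all function names are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical S3 objects laid out in Hive-style daily partitions.
S3_OBJECTS = {
    "logs/dt=2024-01-01/part-0.json": [
        {"device_id": "a1", "event": "play"},
        {"device_id": "b2", "event": "error"},
    ],
    "logs/dt=2024-01-02/part-0.json": [
        {"device_id": "a1", "event": "error"},
        {"device_id": "a1", "event": "error"},
        {"device_id": "b2", "event": "play"},
    ],
}

# Small dimension table, standing in for data kept on Amazon Redshift.
DEVICES = {"a1": "Fire TV Stick", "b2": "Echo"}


def prune_partitions(objects, dt):
    """Keep only the objects that live in the requested date partition."""
    return {key: rows for key, rows in objects.items() if f"/dt={dt}/" in key}


def filter_and_aggregate(objects, event):
    """Count matching events per device (the work pushed down to Spectrum)."""
    counts = defaultdict(int)
    for rows in objects.values():
        for row in rows:
            if row["event"] == event:
                counts[row["device_id"]] += 1
    return dict(counts)


def join_with_dimension(counts, devices):
    """Join the already-small aggregate with the dimension table."""
    return sorted((devices[device_id], n) for device_id, n in counts.items())


scanned = prune_partitions(S3_OBJECTS, "2024-01-02")
errors = filter_and_aggregate(scanned, "error")
report = join_with_dimension(errors, DEVICES)
print(report)  # [('Fire TV Stick', 2)]
```

Only one of the two objects is scanned, and the join touches a single aggregated row rather than every raw event, which is the effect the tip is aiming for.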

The data can then be streamed to an S3 bucket, which Spectrum reads when we execute a job. S3 offers cheap and efficient data storage compared to Amazon Redshift.

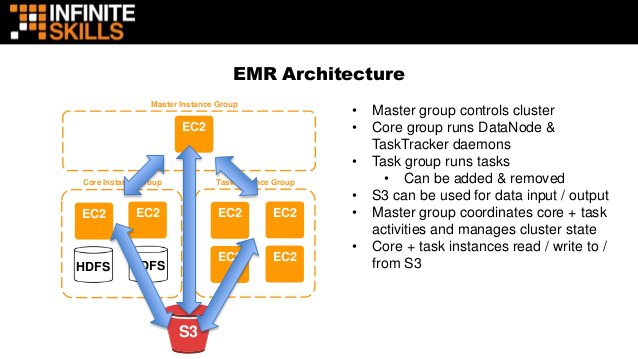

The diagram above maps out the journey of IoT data from generation to visualization and analysis. Data is collected by devices, such as Amazon Alexa, Echo or Fire TV Stick, and streamed into S3 via Kinesis Firehose. Staging the data in S3 and accessing it via Spectrum means there is no data loading time, since the data stays on S3.
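When planning how Spectrum will read the streamed data, it helps to know where Firehose puts it. As a rough sketch, assuming the delivery stream uses Firehose's default S3 prefix (a UTC `YYYY/MM/dd/HH/` path) and no custom prefix has been configured, the folder for a given arrival time can be computed as follows. The function name is hypothetical.

```python
from datetime import datetime, timezone


def firehose_default_prefix(arrival: datetime) -> str:
    """Return the UTC time-based folder path for a timezone-aware arrival
    time, assuming the default Firehose prefix format is in use."""
    utc = arrival.astimezone(timezone.utc)
    return utc.strftime("%Y/%m/%d/%H/")


arrival = datetime(2024, 1, 2, 15, 30, tzinfo=timezone.utc)
prefix = firehose_default_prefix(arrival)
print(prefix)  # 2024/01/02/15/
```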

If you are employing a data lake using Amazon Simple Storage Service (S3) and Spectrum alongside your Amazon Redshift data warehouse, you may not know where it is best to store your data. The answer is probably both locations, because where data is stored affects performance and cost when you query it. To make the most of each, some data is best stored on Amazon Redshift, while other data is better kept on S3 and accessed via Spectrum. In this blog, we will walk you through an example using IoT device data. For an extensive list of where to store which data types, download our eBook Amazon Redshift Spectrum: Expert Tips for Maximizing the Power of Spectrum.
